Pantavisor Architecture

At a high level, Pantavisor is in charge of just two things: container orchestration and communication with the outside world.

Container Orchestration

The software running on a Pantavisor-enabled device at a given moment is called a revision. A revision is composed of a BSP (Board Support Package) and a number of containers. Pantavisor can move from the currently running revision to a new one transactionally: the transition either completes as a whole or the device falls back to the previous revision.
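One way to read "transactional" is all-or-nothing: the new revision either becomes the running one as a whole, or the device keeps the previous one. The Python sketch below illustrates only that idea. `Revision`, `Device`, `transition` and the `start_ok` callback are hypothetical names, not Pantavisor's API (Pantavisor itself is written in C), and a real update involves more steps than a single per-container health check.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Revision:
    """Hypothetical model: a revision is one BSP plus a set of containers."""
    name: str
    bsp: str
    containers: tuple[str, ...] = ()


class Device:
    """Toy device that switches revisions all-or-nothing (illustrative only)."""

    def __init__(self, current: Revision) -> None:
        self.current = current

    def transition(self, new: Revision, start_ok: Callable[[str], bool]) -> bool:
        """Try to move to `new`; fall back to the previous revision on failure.

        `start_ok` is a hypothetical callback standing in for "did this
        container come up correctly under the new revision?".
        """
        previous = self.current
        self.current = new
        if all(start_ok(c) for c in new.containers):
            return True           # commit: the new revision stays active
        self.current = previous   # roll back: the device keeps the old revision
        return False


old = Revision("0", "bsp-1.0", ("net", "app"))
new = Revision("1", "bsp-1.1", ("net", "app", "broken"))
device = Device(old)
print(device.transition(new, start_ok=lambda c: c != "broken"))  # False
print(device.current.name)                                        # 0
```

Keeping a reference to the previous revision until the new one is confirmed is what makes the fallback trivial in this sketch.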

Revisions and other relevant data are stored on disk in the device so that they persist across reboots.
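As a minimal sketch of persisting revisions, assume a hypothetical layout with one `revisions/<name>/state.json` file per revision plus a `current` file naming the active one; this is not Pantavisor's actual on-disk format. The Python code below uses the common write-to-a-temporary-file-then-rename pattern, so a crash mid-write never leaves a half-written file in place. That atomic-rename technique is a generic POSIX idiom chosen for illustration, not a description of how Pantavisor stores its data.

```python
import json
import os
import tempfile


def atomic_write(path: str, data: str) -> None:
    """Write `data` to `path` so readers see either the old or the new content."""
    directory = os.path.dirname(path) or "."
    fd, tmp = tempfile.mkstemp(dir=directory)  # same directory, same filesystem
    try:
        with os.fdopen(fd, "w") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())   # push the file contents to the storage device
        os.replace(tmp, path)      # atomic rename on POSIX filesystems
    except BaseException:
        os.unlink(tmp)             # do not leave temporary files behind
        raise


def save_revision(storage: str, rev: str, state: dict) -> None:
    """Persist a revision's state, then point 'current' at it (hypothetical layout)."""
    rev_dir = os.path.join(storage, "revisions", rev)
    os.makedirs(rev_dir, exist_ok=True)
    atomic_write(os.path.join(rev_dir, "state.json"), json.dumps(state))
    atomic_write(os.path.join(storage, "current"), rev)  # switch pointer last


def load_current(storage: str) -> tuple[str, dict]:
    """Return the active revision name and its stored state."""
    with open(os.path.join(storage, "current")) as f:
        rev = f.read()
    with open(os.path.join(storage, "revisions", rev, "state.json")) as f:
        return rev, json.load(f)


storage = tempfile.mkdtemp()
save_revision(storage, "1", {"bsp": "bsp-1.0", "containers": ["app"]})
print(load_current(storage))  # ('1', {'bsp': 'bsp-1.0', 'containers': ['app']})
```

Writing the `current` pointer only after the revision data is complete means a reader sees either the old revision or the fully written new one.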

Communication with the Outside World

Pantavisor-enabled devices need to communicate with the outside world to consume new updates, exchange metadata or send logs. This interaction can take place remotely as well as locally.

Customisation

Pantavisor can be set up in different ways, offering multi-level configuration as well as several operational init modes.
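Multi-level configuration is often implemented as stacked layers in which a more specific level overrides a more general one. The Python sketch below assumes three hypothetical levels (built-in defaults, a configuration file and command-line overrides) and hypothetical keys; Pantavisor's actual levels, keys and precedence rules are not described here.

```python
from collections import ChainMap


def resolve_config(*levels: dict) -> dict:
    """Merge configuration levels; later (more specific) levels win.

    The level order and the keys used below are illustrative assumptions,
    not Pantavisor's actual configuration keys or precedence rules.
    """
    # ChainMap looks keys up in its maps left to right, so reverse the
    # levels to give priority to the last one passed in.
    return dict(ChainMap(*reversed(levels)))


defaults = {"log_level": "INFO", "watchdog_enabled": "1"}   # hypothetical keys
config_file = {"log_level": "DEBUG"}
command_line = {"watchdog_enabled": "0"}

config = resolve_config(defaults, config_file, command_line)
```

Here `config` ends up with `log_level` taken from the configuration file and `watchdog_enabled` taken from the command line, while any key set only in the defaults would still be visible.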

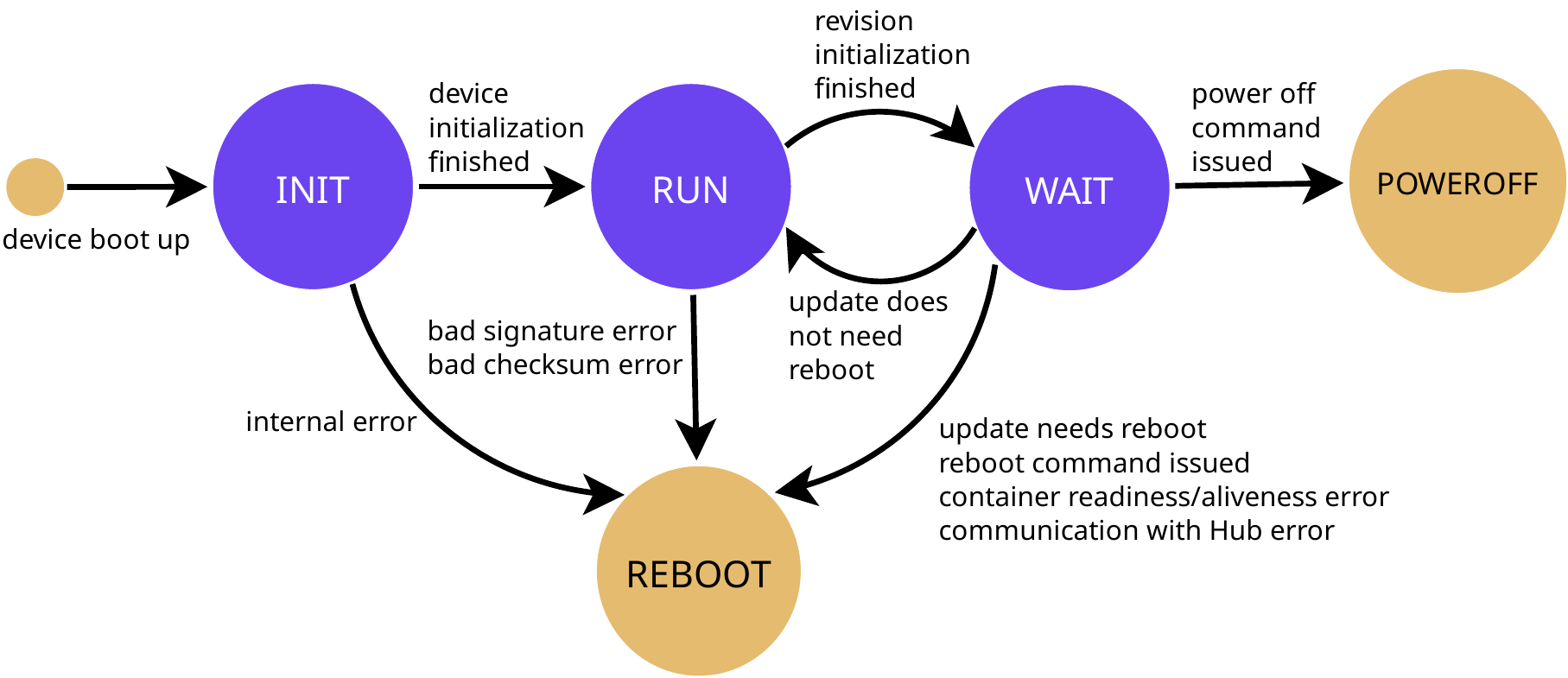

State Machine

For a very simplified view of how Pantavisor works, take a look at its state machine:

Each state can be summarized as follows:

- INIT:
    - Prepare the base rootfs
    - Prepare persistent storage
    - Parse configuration
    - Choose init mode
    - Initialize interaction with the bootloader
    - Initialize the log system
    - Initialize the control socket
    - Initialize the watchdog

- RUN:
    - Initialize the running revision
    - Check object checksums
    - Check revision signatures

- WAIT:
    - Start containers and check their statuses
    - Manage any ongoing update, including installation, verification, transition, etc.
    - Run the full Pantacor Hub client state machine
    - Process control socket requests
    - Manage metadata
    - Check and run the garbage collector
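The three states above can be sketched as a tiny state machine. This Python code is a hypothetical simplification of an already simplified view: only the RUN object-checksum check does real work (SHA-256 over in-memory bytes, an assumed hash choice), signature checks are omitted, the `ERROR` state is an addition not described above, and WAIT simply loops, whereas the real WAIT step also drives updates and the other tasks listed.

```python
import hashlib
from enum import Enum, auto


class State(Enum):
    INIT = auto()
    RUN = auto()
    WAIT = auto()
    ERROR = auto()  # hypothetical failure state, not among the three above


def objects_valid(objects: dict[str, tuple[bytes, str]]) -> bool:
    """RUN check: each object's SHA-256 must match its expected hex digest."""
    return all(hashlib.sha256(data).hexdigest() == expected
               for data, expected in objects.values())


def step(state: State, revision: dict) -> State:
    """Advance one tick of a heavily simplified, hypothetical state machine."""
    if state is State.INIT:
        # Real INIT work (rootfs, storage, config, init mode, bootloader,
        # logs, control socket, watchdog) would happen here.
        return State.RUN
    if state is State.RUN:
        # Initialize the running revision and verify its objects.
        return State.WAIT if objects_valid(revision["objects"]) else State.ERROR
    if state is State.WAIT:
        # Containers, updates, hub client, control socket requests, metadata
        # and garbage collection would be serviced here on every tick.
        return State.WAIT
    return state  # ERROR is terminal in this sketch


def run(revision: dict, ticks: int) -> list[State]:
    """Drive the machine for a fixed number of ticks and record each state."""
    state, trace = State.INIT, [State.INIT]
    for _ in range(ticks):
        state = step(state, revision)
        trace.append(state)
    return trace


good = {"objects": {"app.tar": (b"hello", hashlib.sha256(b"hello").hexdigest())}}
bad = {"objects": {"app.tar": (b"tampered", hashlib.sha256(b"hello").hexdigest())}}
print([s.name for s in run(good, 3)])  # ['INIT', 'RUN', 'WAIT', 'WAIT']
print([s.name for s in run(bad, 3)])   # ['INIT', 'RUN', 'ERROR', 'ERROR']
```

Keeping each state's work inside a single `step` call mirrors the idea of a loop that repeatedly services the current state until something triggers a transition.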